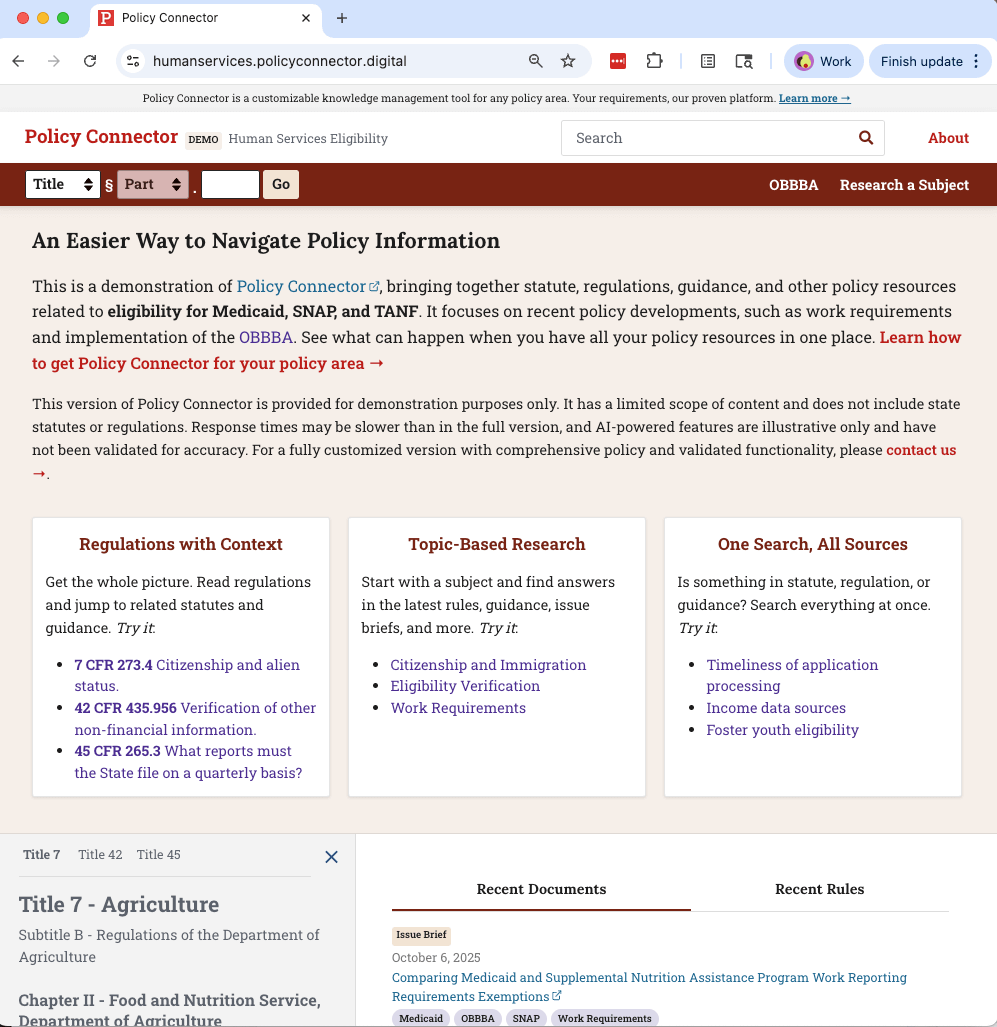

Policy Connector is a web app that saves policymakers time by bringing together statute, regulations, and guidance.

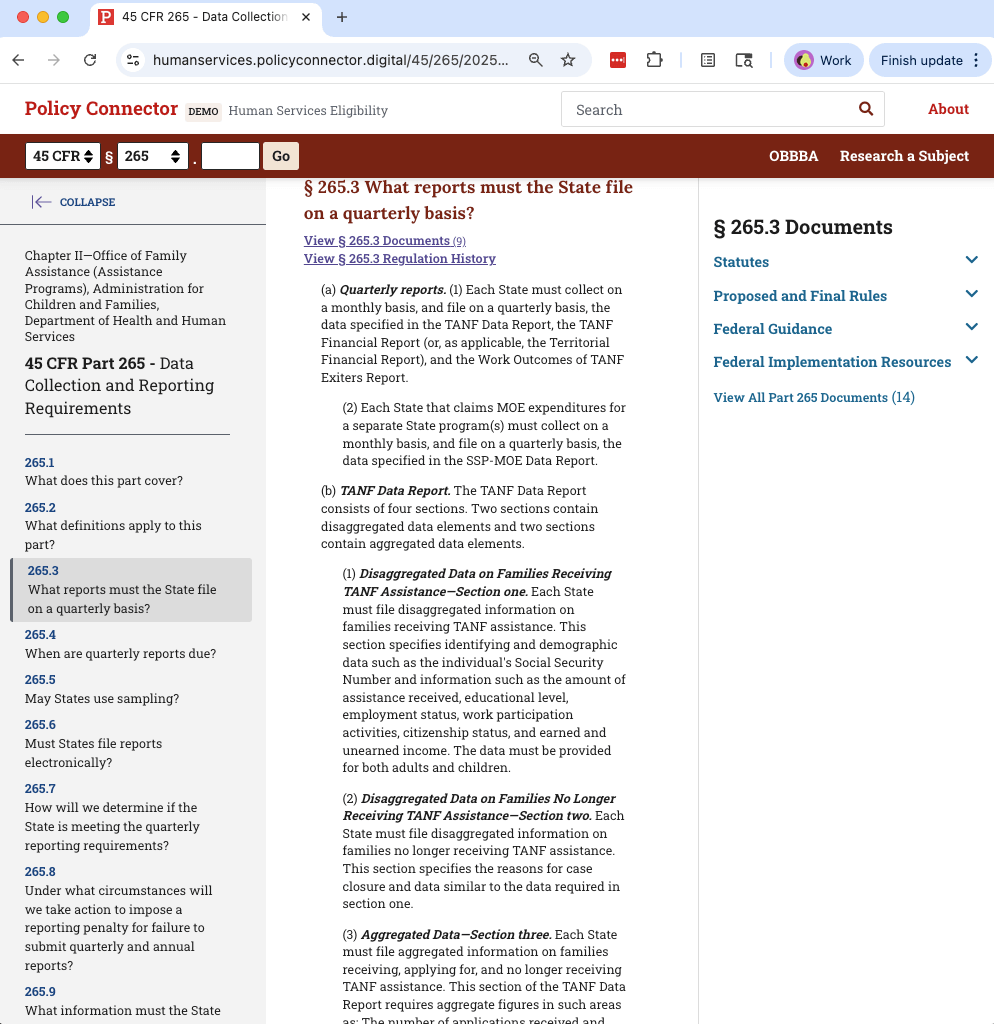

My three-person team maintains a web application that makes policy work easier for a group of government staff by integrating statute, regulation, rules, guidance, and internal policy materials into a robust research platform. It works well, and our job is to keep the gears oiled and make incremental improvements. We’re empowered to create ambitious new features, but only if we have capacity to implement them.

Our application is a client-specific implementation of A1M’s Policy Connector. With the flurry of interest in artificial intelligence (AI), we asked ourselves if Policy Connector and our application could benefit from the new capabilities rapidly becoming available. Finding the right solutions would mean setting aside the hype and taking a different approach—a boring one.

Using our methodical approach, we've added several AI and machine learning (ML) features built on existing libraries and services.

You can try a demo of Policy Connector yourself.

Here's what we implemented and what we learned.

This application is largely composed of off-the-shelf components: open source frameworks and tools including Django, PostgreSQL, and Vue, deployed with GitHub Actions and AWS CDK, and running on AWS services such as RDS, S3, and Lambda. This reflects our “boring” strategy for delivering excellent value to the government and providing a custom-fit solution at a low operating cost: assemble mature, inexpensive components using human-centered design (HCD), agile, and DevOps best practices. We approach AI/ML the same boring way: integrate services and tools to meet user needs.

Our approach for adding new functionality includes:

Identify unmet user needs. We reviewed past user research and our backlog of ideas, keeping in mind newer AI/ML tools and techniques that could make an old idea more feasible. We listened to users and stakeholders who asked "could AI do X?", because those ideas are expressions of unmet needs, which is valuable input no matter whether AI turns out to be the best solution.

Find out what’s available. Since this is a federal government project, we’re limited to using approved services, so an important early step is to learn what’s on the list. For example, we determined we could use several Amazon Bedrock APIs that cost very little when used at a small scale.

Ask ourselves: Could we solve this without AI? Is generative AI the right form of AI/ML for this? We always look for solutions that are straightforward to build, inexpensive to operate, and low risk, so it’s worth checking whether there’s a simpler option instead of going straight to a large language model (LLM). For us, the strengths and weaknesses of generative AI make it appropriate for some use cases, such as brainstorming, and too risky for others. Users of our application are policy subject matter experts who trust our platform to give them accurate, reliable information. We worked hard to earn that trust, so we’d need to be extremely careful and do a lot of testing before deploying generative AI in user-facing features.

Then figure out: What’s the smallest, simplest thing we could try to see if it helps? This is a best practice in human-centered design and agile methodologies.

For example, let’s say you have long lists of important documents, and people want summaries of the documents to make the lists easier to navigate, but there are far too many documents to allow writing summaries by hand. Instead of going straight to generating summaries, consider:


So what did we end up implementing? Did AI end up proving useful? Here are the improvements our process led us to and why we think they’re the right fit.

We use LLM coding tools to speed up repetitive coding tasks, such as updating tests, writing boilerplate code, and migrating between versions of frameworks. We also use them to make quick interactive prototypes for potential new features.

A small team works best if each member can serve as a generalist, and LLM coding tools have supported this product manager in expanding my UX design and front-end development skills, while supporting our front-end developer in expanding his full-stack development skills. We combine this approach with a high standard of human code review, because our primary responsibility is to provide a stable, maintainable, and sustainable application.

This open-source tool detects file types so we can route documents to the appropriate text extraction tools for search indexing.
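To make the routing idea concrete, here is a minimal sketch in Python. It is not the tool we use: it checks a handful of well-known leading byte signatures instead of using a trained detector, and the names `MAGIC_SIGNATURES`, `ROUTES`, `detect_type`, `route_document`, and the extractor labels are all hypothetical, chosen only for illustration.

```python
# Illustrative only: a tiny signature-based detector, not the open-source tool itself.
# Each entry maps a file's leading bytes to a coarse type label (hypothetical labels).
MAGIC_SIGNATURES = [
    (b"%PDF-", "pdf"),
    (b"\x89PNG\r\n\x1a\n", "image"),    # PNG
    (b"\xff\xd8\xff", "image"),         # JPEG
    (b"PK\x03\x04", "zip_container"),   # DOCX, XLSX, and PPTX are ZIP archives
]

# Hypothetical extractor names; a real pipeline would call actual extraction code.
ROUTES = {
    "pdf": "text_extraction_service",
    "image": "text_extraction_service",
    "zip_container": "office_document_extractor",
    "text": "plain_text_reader",
}


def detect_type(data: bytes) -> str:
    """Return a coarse type label based on the first bytes of the file."""
    for signature, label in MAGIC_SIGNATURES:
        if data.startswith(signature):
            return label
    # No known signature: treat content that decodes as UTF-8 as plain text.
    try:
        data.decode("utf-8")
        return "text"
    except UnicodeDecodeError:
        return "unknown"


def route_document(data: bytes) -> str:
    """Pick an extractor for the document, or skip indexing if the type is unknown."""
    return ROUTES.get(detect_type(data), "skip_indexing")
```

The point of detecting the type from content rather than from the file extension is that uploaded files are often mislabeled, and sending a file to the wrong extractor produces empty or garbled search text.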

This service extracts text from PDFs and images, including scanned documents without selectable text, to enable searching the contents of those documents. OCR is a classic and incredibly valuable application of machine learning.

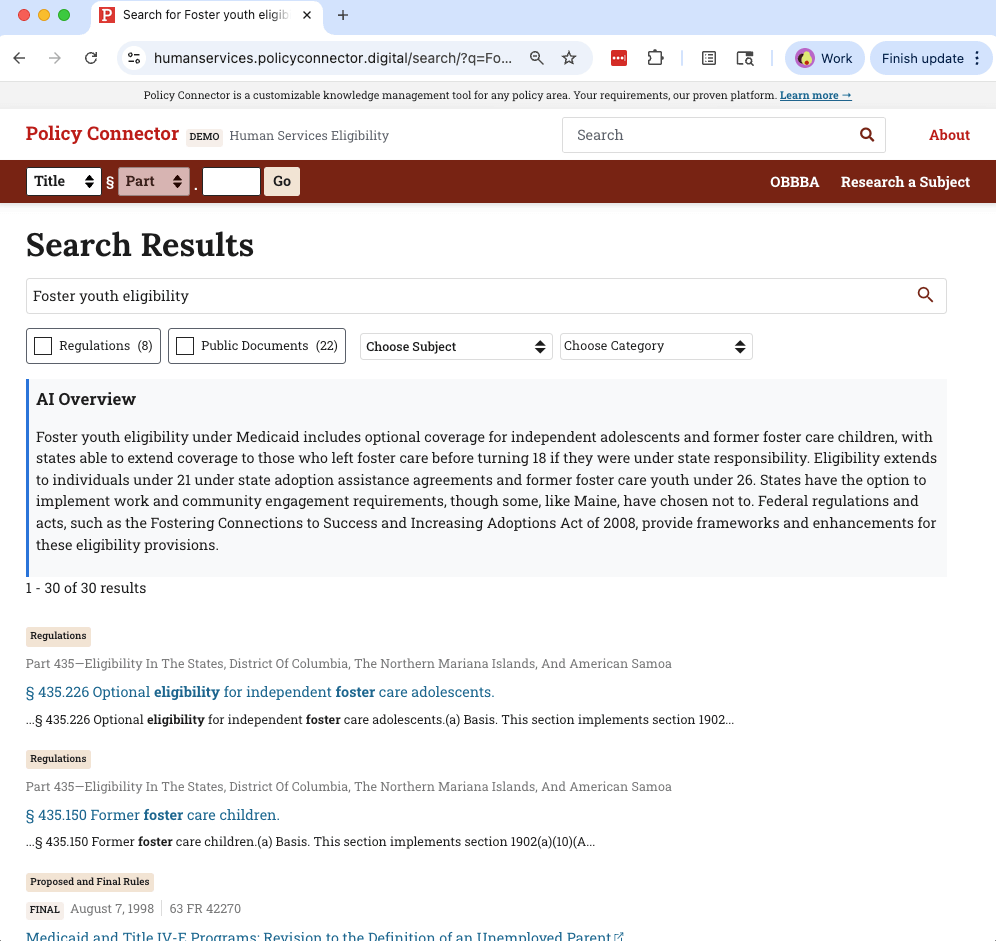

We use PostgreSQL full-text search, which is cost-effective and highly customizable but doesn’t have the most effective ranking algorithm. We use Titan Text Embeddings to generate embeddings (numerical representations) for documents, which we compare with search queries using the pgvector extension (available in AWS RDS Aurora, which is what we use). We combine traditional and semantic search rankings to improve search relevance beyond simple keyword matching.
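One common way to combine a keyword ranking with a semantic ranking is reciprocal rank fusion (RRF). The sketch below is an assumption for illustration, not a description of our production ranking: it computes cosine similarity in memory rather than in the database, uses toy two-dimensional vectors in place of real embeddings, and the function names and the constant `k = 60` are hypothetical choices.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def semantic_ranking(query_vec, doc_vecs):
    """Order document IDs from most to least similar to the query embedding."""
    return sorted(
        doc_vecs,
        key=lambda doc_id: cosine_similarity(query_vec, doc_vecs[doc_id]),
        reverse=True,
    )


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists: each list adds 1 / (k + position) to a document's score."""
    scores = {}
    for ranking in rankings:
        for position, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + position)
    # Highest fused score first; break ties by document ID for stable output.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Toy data: hypothetical document IDs and 2-dimensional stand-ins for embeddings.
doc_vecs = {"reg-a": [1.0, 0.0], "guidance-b": [0.6, 0.8], "statute-c": [0.0, 1.0]}
by_meaning = semantic_ranking([0.0, 1.0], doc_vecs)
by_keyword = ["reg-a", "guidance-b", "statute-c"]  # e.g. from full-text search
fused = reciprocal_rank_fusion([by_keyword, by_meaning])
```

RRF works on rank positions rather than raw scores, so it avoids having to put full-text relevance scores and vector similarities on a common scale. A document that appears near the top of both lists rises above one that only one method favors.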

We built a prototype that summarizes in-app search results using a model from Amazon Bedrock. This was easy and interesting, but for now we only run it as a demonstration, not in production. Inaccurate summaries could damage our users’ trust, so we’d first need to do user research to evaluate whether these summaries are actually helpful to our users. As a small operations and maintenance team, we don’t currently have the capacity for deep user research. But this kind of capability could appear in future Policy Connector implementations, if the need is there.

Our client organization has its own AI assistant chatbot that staff can use, managed by an internal team that takes care to train staff on using it effectively. This in-house tool is based on a commercial LLM, and it has the option to integrate additional information from other sources. We’re looking into making the data from our application available to this existing system, to enable augmenting the general-purpose training data with up-to-date policy information relevant to our staff users. Integration is much more feasible and sensible than building a separate AI assistant.

It can be hard to see through the hype around AI. With a “boring” approach, though, teams can find value and implement useful improvements. AI and ML tools are helping our small team continue improving a mature product. We've added better search, better file processing, and development assistance by integrating existing services. By combining AI with a methodical implementation strategy, we can experiment with and deploy features that would have required more significant engineering time just a few years ago.

